Activities

Data-centric AI

Data-centric AI is defined as "the discipline of systematically engineering the data needed to build a successful AI system." It is concerned with real-world data that are unstructured, often incomplete or limited in number, and partially inconsistent. Starting from real-world case studies and datasets coming from different application contexts, such as clinical medicine, human-resource management, and industrial production, our objectives are to study specific data-preparation and AI methodologies for processing data in the wild, making AI performance adequate and stable in these challenging contexts, and to study AI solutions that meet the specific requirements arising in those application contexts.

Funded Projects

WASABI: White-label shop for digital intelligent assistance and human-AI collaboration in manufacturing

HybridAI: an hybrid approach to Natural Language Understanding

Work Datafication and Behavioral Visibility in The Digital Workplace

Assessment of well-being state in Post-acute COVID Syndrome: an international multicentre prospective cohort study

Machine Learning to Operationalize WG definition and Generate a Personalized Phenotypic Characterization in PLWHIV

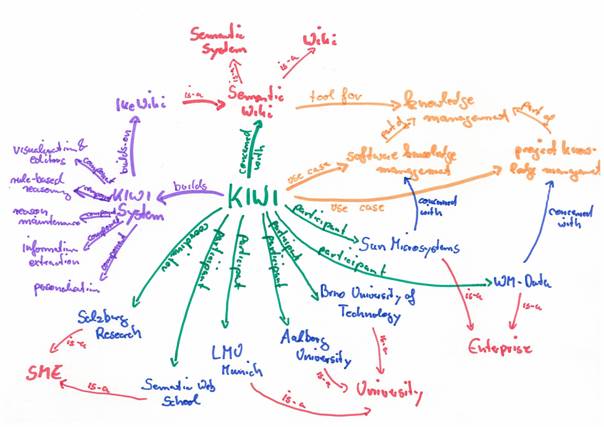

Information sharing, interoperability and Semantic Web

The ever-growing and widespread availability of data from Internet information sources has generated great interest in the potential of information sharing and interoperability. In line with this view, the Semantic Web aims at converting the current web, dominated by unstructured and semi-structured documents, into a "web of data". The need to complement the Web with semantics has spurred efforts toward a rich representation of data, giving rise to the widespread use of ontologies, XML schemas, and RDF schemas.

Our work in this field is concerned with the sharing and interoperability of heterogeneous and distributed data sources and is mainly focused on the Peer-to-Peer (P2P) paradigm and its evolutions toward dataspaces. We propose techniques for creating effective Peer Data Management Systems (PDMSs) with limited information loss and solutions for query reformulation and processing and query routing. We consider various application contexts ranging from digital libraries to business intelligence.

Real-time query processing for data streams

We study solutions for the efficient management of data streams, including sensor data, RFID data, data coming from On-Board Units (OBUs), and so on. We are especially interested in developing techniques for real-time query processing in the context of workload-intensive applications. We are also concerned with the specificities of the different kinds of streaming data, such as RFID data, which are treated as probabilistic data, or OBU data, which are spatio-temporal. This activity was partially funded by the PEGASUS project.

Past Activities

Approximate search in non-conventional data

This is one of the ISGroup's core activities. We focus on models, algorithms and data structures for approximate searches in non-conventional data such as graphs, semi-structured data and plain text as well as in non-conventional contexts such as heterogeneous information settings. Our main concern is how to adapt user needs to the information of interest when the latter does not match exactly and how to rank results. Approximate search approaches were studied, for instance, in the context of distributed digital libraries, P2P, plagiarism detection and machine translation, and for RDF, XML and plain text.

Funded Projects

Facit SME

NeP4B: Networked Peers for Business

WISDOM: Web Intelligent Search based on DOMain ontologies

DELOS – A Network of Excellence on Digital Libraries

Semantic Web techniques for the management of digital identity and the access to norms

La dinamica della norma nel tempo: aspetti giuridici ed informatici

Technologies and Services for Enhanced Content Delivery (ECD)

Data Management in Intelligent Transportation Systems

We study solutions for the management of data in the context of Intelligent Transportation Systems (ITS). In particular, we cope with the technical challenges of devising a GIS Data Stream Management System (DSMS) providing reliable and timely traffic information. The final aim is the development of an infotelematics infrastructure providing various services to improve the safety and efficiency of vehicles’ and goods’ flows.

Biological Data Source Interoperability

This activity focuses on enabling interoperability among different biological data sources and, ultimately, on supporting expert users in the complex process of querying the valuable knowledge hidden in such a huge quantity of data so that they obtain useful results. Part of this activity is carried out in collaboration with Prof. Silvio Bicciato and Dr. Cristian Taccioli.

Multiversion Data Management

The need to maintain and access multiple versions of data is evident in different application scenarios. For instance, in the legal domain laws evolve over time and accessing past versions is necessary for various purposes, while in an e-government scenario citizens would like to retrieve personalized norm versions containing only the provisions that are applicable to their personal case. Similar needs arise in the medical context.

We study models and technologies for the management and access of multi-versioned XML data in all these contexts.

Business Intelligence Network

One of the key features of Business Intelligence (BI) 2.0 is the ability to become collaborative and extend the decision-making process beyond the boundaries of a single company. However, BI platforms are aimed at serving individual companies, and they cannot operate over networks of companies characterized by organizational, lexical, and semantic heterogeneity.

As a joint work with Prof. Stefano Rizzi and Prof. Matteo Golfarelli, we propose a framework, called Business Intelligence Network (BIN), for sharing BI functionalities over complex networks of companies that are chasing mutual advantages through the sharing of strategic information. In this context, we provide solutions for mapping specifications through an effective query language and for query processing, thus addressing the problem of query reformulation for aggregate queries.

Structural Information Disambiguation

Structural disambiguation aims at making explicit the meaning of the terms used to describe the portion of the real world of interest in web directories, ontologies, relational data, XML and so on.

We study versatile disambiguation approaches and evaluate them experimentally in real-world settings. STRIDER is the name of the system we implemented in this context.

Semantic Knowledge Filtering in a Software Development Scenario

Our research activity concerns the use of semantic techniques to facilitate the process of software development in Small and Medium Enterprises (SMEs). In particular, we study algorithms for the automatic extraction of a semantic glossary of terms (keywords) from textual descriptions of various elements of the project knowledge backbone, the ORM model, such as software methodologies and quality requirements. Building on this glossary, we apply semantic similarity techniques between sets of keywords in order to support automatic matching processes between requirements and methodologies, as well as filtering and searching of the available elements in the model based on specific targets or business methods.

Efficient search in semi-structured data

In collaboration with Prof. Pavel Zezula we studied twig query processing techniques, both for ordered and unordered matching. The proposed solution adopts the pre/post numbering scheme to index tree nodes and holistic approaches for all kinds of matching. A well-known and widespread context of application for these kinds of techniques is XML data. The final results of this activity were published in the VLDB Journal.
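As a rough illustration of the pre/post numbering scheme mentioned above, the following minimal sketch assigns pre-order and post-order ranks to the nodes of a small tree and uses them to test ancestor/descendant relationships without traversing the tree. The `Node` class, the function names and the sample document are hypothetical and chosen only for this example; this is not the indexing code used in the published work.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A tree node (hypothetical structure, for illustration only)."""
    label: str
    children: list = field(default_factory=list)
    pre: int = -1   # rank in pre-order traversal
    post: int = -1  # rank in post-order traversal


def number_tree(root):
    """Assign pre-order and post-order ranks to every node of the tree."""
    counters = {"pre": 0, "post": 0}

    def visit(node):
        # The pre rank is assigned when the node is first reached.
        node.pre = counters["pre"]
        counters["pre"] += 1
        for child in node.children:
            visit(child)
        # The post rank is assigned after all descendants are numbered.
        node.post = counters["post"]
        counters["post"] += 1

    visit(root)


def is_ancestor(a, d):
    """a is an ancestor of d iff a starts before d and ends after d."""
    return a.pre < d.pre and a.post > d.post


# Sample tree: book(title, author(name))
name = Node("name")
author = Node("author", [name])
title = Node("title")
book = Node("book", [title, author])
number_tree(book)

print(is_ancestor(book, name))   # True
print(is_ancestor(title, name))  # False
```

With these two ranks stored per node, a structural relationship between two nodes can be decided by comparing four integers, which is what makes the scheme attractive as an index for tree-shaped data such as XML.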

Example Based Machine Translation

EBMT (Example Based Machine Translation) is one of the most promising solutions to automate translation. It essentially uses examples of past translations to help to translate other, similar source-language sentences into the target language. In this way, it aims at achieving better quality and quantity in less time, while preserving and treasuring the richness and accuracy that only human translation can achieve.

In collaboration with LOGOS Group, we introduced a novel approach to proposing effective translation suggestions in the field of EBMT. It applies approximate matching techniques to sentences, also considering additional problems related to multilingualism. The approach was implemented in a system named EXTRA. We conducted an extensive evaluation of EXTRA and a comparison between the results offered by our system and the major commercially available systems.
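To give a feel for what approximate matching on sentences can look like, the sketch below ranks the entries of a small translation memory by word-level edit distance from a new source sentence. It is a generic illustration only: the function names, the distance threshold and the sample sentences are assumptions made for this example, and it does not reproduce the matching technique implemented in EXTRA.

```python
def word_edit_distance(a, b):
    """Edit distance between two sentences, counted in whole words."""
    s, t = a.lower().split(), b.lower().split()
    # prev[j] holds the distance between the words of s seen so far and t[:j].
    prev = list(range(len(t) + 1))
    for i, s_word in enumerate(s, 1):
        curr = [i]
        for j, t_word in enumerate(t, 1):
            cost = 0 if s_word == t_word else 1
            curr.append(min(prev[j] + 1,          # delete s_word
                            curr[j - 1] + 1,      # insert t_word
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def suggest(query, memory, max_distance=2):
    """Return (distance, source, target) tuples within max_distance, best first."""
    scored = [(word_edit_distance(query, src), src, tgt) for src, tgt in memory]
    return sorted(item for item in scored if item[0] <= max_distance)


# Hypothetical translation memory of (source, target) sentence pairs.
memory = [
    ("press the green button", "premere il pulsante verde"),
    ("open the file menu", "aprire il menu file"),
]

for distance, source, target in suggest("press the red button", memory):
    print(distance, source, "->", target)
```

Working at the word level rather than the character level keeps the distance aligned with how a translator would reuse a past translation: one differing word means one fragment to retranslate.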

Schema Evolution and Versioning

The problems of schema evolution and versioning arose in the context of long-lived database applications, where stored data were considered worth surviving changes in the database schema. A database supports schema evolution if it permits modifications of the schema without the loss of extant data; in addition, it supports schema versioning if it allows the querying of all data through user-definable version interfaces. With schema versioning, different schemata can be identified and selected by means of a suitable “coordinate system”: symbolic labels are often used in design systems for this purpose, whereas proper time values are the preferred choice for temporal applications.

Our work on this topic was mainly focused on object-oriented databases. Most activities were conducted during Federica's PhD period at the University of Bologna (supervisors: Prof. Maria Rita Scalas and Prof. Fabio Grandi) and in collaboration with Prof. John Roddick and Prof. Enrico Franconi.